Executive Summary

This research examines the use of hate speech and national slurs to dehumanise, call for violence against, and celebrate the death of Ukrainians. The data analysed indicates widespread hate speech targeting Ukrainians, primarily on Twitter and Telegram. Examination of these accounts indicates the content was further spread to YouTube and Facebook.

The research was accomplished by analysing data collected from Twitter and Telegram based on national slurs referencing Ukrainians in the context of Russia’s full-scale invasion of Ukraine. The dataset collected and analysed in the research resulted in 1585 accounts with more than 2500 interactions from Twitter, and 15655 results from more than 480 channels on Telegram.

This analysis shows that in comparison to prior years, February 2022 saw a surge in the use of national slurs and hate speech against Ukrainians on both Twitter and Telegram.

Twitter accounts that were found to be using hate speech against Ukrainians include accounts that are verified with a blue tick. Many of those accounts also post content heavily supportive of Russia, stoking divide within US audiences, targeting the LGBTQI+ community and sharing memes and messages related to conspiracy theories such as biolabs in Ukraine, QANON and issues related to Donald Trump.

The accounts analysed on Telegram include channels that took videos of Ukrainian funerals, or from Ukrainians who had visited graves, and reposted them with degrading and dehumanising comments. Other channels specifically focus on looking for dead or captured Ukrainians and celebrating the circumstances around that imagery. The content circulated in these channels was reposted on Twitter, YouTube and Facebook.

Further research indicates an increase in the use, and the gravity, of hate speech by Russian politicians, Russian state-linked media and pro-Russian propagandists calling for (further) violence and celebrating the death of Ukrainians.

The phrasing and language seen in the content uncovered in this research are similar to language used in other scenarios where hate speech has incited and/or fuelled violence. Historically, hate speech has been used to justify, incite and fuel violence against groups, minorities and ethnicities such as in the Holocaust, by the Khmer Rouge in Cambodia, in Rwanda against the Tutsi people and in Myanmar against the Rohingya.

The systematic and widespread use of dehumanising language and national slurs against Ukrainians seen in this research is further fuelled by its spread across major social media platforms, each of which has its own policies in place to combat this type of widespread activity.

The findings of this research can help social media platforms form more informed opinions at a policy level in dealing with hate speech targeting Ukrainians, support understanding of the terminology used on a systematic level, and outline the clear, widespread level of dehumanising language that supports Russia’s war in Ukraine.

This research on the use of hate speech and dehumanising language in reference to Ukrainians should be taken in context with the evidence of Russian forces committing acts against Ukrainians such as beheadings, castrations, ethnic cleansing, torture and the overwhelming use of military forces through the bombing of schools, hospitals, energy sites and other infrastructure as seen on the EyesonRussia.org map.

1. Keywords examined

Keywords used in this research are: Khokhol (хохол), Khokhols (хохлы), Hohol, Hohols.

The main terminology used in this research on the rise of anti-Ukrainian national slurs references khokhol, which has deep cultural roots in Ukraine and has been used increasingly by Russians since 2014.

Slurs like khokhol do not directly dehumanise Ukrainians, but the context in which they are used, alongside calls for violence and justifications of violence and atrocities against Ukrainians, makes the terminology part of the justification for further violence and atrocities.

Technically, khokhol refers to the characteristic hairstyle worn by Ukrainian Cossacks in the 16th and 17th centuries. Hohol is an iteration of the term. This derogatory term has been used as “a national slur used by Russians to describe Ukrainians”. In a 2017 report from Facebook, the use of slurs by Russians and Ukrainians towards each other was found to have escalated since tensions significantly increased between the countries in 2014, causing Facebook to start removing content using the terminology.

The specific terminology Facebook identified as increasing was the reference to Russians by Ukrainians as “moskal” which meant “Muscovites” and the use of “khokhol” by Russians in reference to Ukrainians.

2. Introduction

In February 2022, after Russia commenced a full-scale invasion of Ukraine and subsequently targeted Ukraine’s people, communities and infrastructure, social media became the dominant place to watch the invasion, and the atrocities it left behind, unfold.

Alongside the social media coverage of what has unfolded in Ukraine has come the weaponisation of social media platforms. According to the OECD, Russian information operations have significantly increased since the 2022 full-scale invasion. In April 2022, Meta reported a surge in social media disinformation, including content linked to Russia’s invasion of Ukraine.

The surge of disinformation online has been accompanied by an increase in the searching for, viewing of and use of hate speech by Russians, as well as Ukrainians.

However, as this report indicates, the hate speech terminology used against Ukrainians indicates an effort to incite violence by calling for Ukrainian deaths, celebrating events where civilians have been killed and the use of language to dehumanise Ukrainians.

According to the United Nations Strategy and Plan of Action on Hate Speech, hate speech is described as:

“Any kind of communication in speech, writing or behaviour, that attacks or uses pejorative or discriminatory language with reference to a person or a group on the basis of who they are, in other words, based on their religion, ethnicity, nationality, race, colour, descent, gender or other identity factor”.

International Association of Genocide Scholars research states:

“Hate speech regularly, if not inevitably, precedes and accompanies ethnic conflicts, and particularly genocidal violence. Without such incitement to hatred and the exacerbation of xenophobic, anti-Semitic, or racist tendencies, no genocide would be possible and persecutory campaigns would rarely meet with a sympathetic response in the general public.”

The type of language and wording used by Russian politicians, Russian state-linked media outlets, and the accounts and posts seen in this research indicates a number of strategies: namely to dehumanise Ukrainians by referring to them with derogatory slurs, to celebrate their deaths (as is evidenced in the data analysis of this research), and to fuel and justify further violence against Ukrainians.

This strategic effort has been stated clearly by the Deputy Chair of the Security Council of the Russian Federation, Dmitry Medvedev, on 8 April 2023, in a tweet on “Why Ukraine will disappear” – the long tweet finished with the line: “Nobody on this planet needs such a Ukraine. That’s why it will disappear”. The tweet indicates that the goal is not only violence against Ukrainians but also the destruction of Ukraine at a state level.

Figure: Screenshot from Twitter.

3. Looking back: how hate speech has fuelled genocide

Hate speech has been used in a number of contexts to incite violence. Historically there are numerous examples where hate speech fuelled or incited harm.

The United Nations outlines historical precedents showing how hate speech can be a precursor to atrocity crimes in cases such as:

In the Holocaust, independent media was replaced and state media disseminated hate speech, antisemitic remarks and disinformation to justify atrocities.

In Cambodia, the Khmer Rouge used propaganda to label minorities and dissenters as enemies.

In the Srebrenica genocide, nationalist propaganda portrayed the Bosnian Muslim population and other groups as enemies of the Serbs.

In Myanmar, a campaign of hate and propaganda was waged by the Myanmar government against the Rohingya Muslim minority in the lead up to a scorched earth campaign against the Rohingya people.

Specific examples of the danger of hate speech against certain groups of people can also be seen in the case of Rwanda, where the Hutu ethnic majority hunted and killed Tutsi ethnic minorities.

Radio stations had incited Hutus against Tutsi minorities, referring to Tutsis in derogatory dehumanising terms. Radio broadcaster RTLM encouraged Hutus to “cut down tall trees” in reference to the Tutsis due to their taller appearance.

The founding members of the RTLM radio station were sentenced to life in prison for charges relating to genocide under the International Criminal Tribunal for Rwanda. This was for their role in helping the broadcast of information and propaganda that fuelled the killings and hatred towards Rwandans, specifically Tutsis.

The case in Rwanda primarily relied on radio to broadcast hate speech dehumanising ethnic Tutsis. This was between 1993 and 1994, in stark contrast to the range of channels available for broadcasting such messaging since Russia’s full-scale invasion of Ukraine in 2022.

The derogatory references targeting Ukrainians in this circumstance are far more widespread internationally, as seen in the findings of this research.

4. Use of hate speech targeting Ukrainians

This report primarily focuses on the use of online hate speech against Ukrainians by looking at the use of the words khokhol, hohol and its iterations and the increase in that activity largely since February 2022, but reaching as far back as 2014.

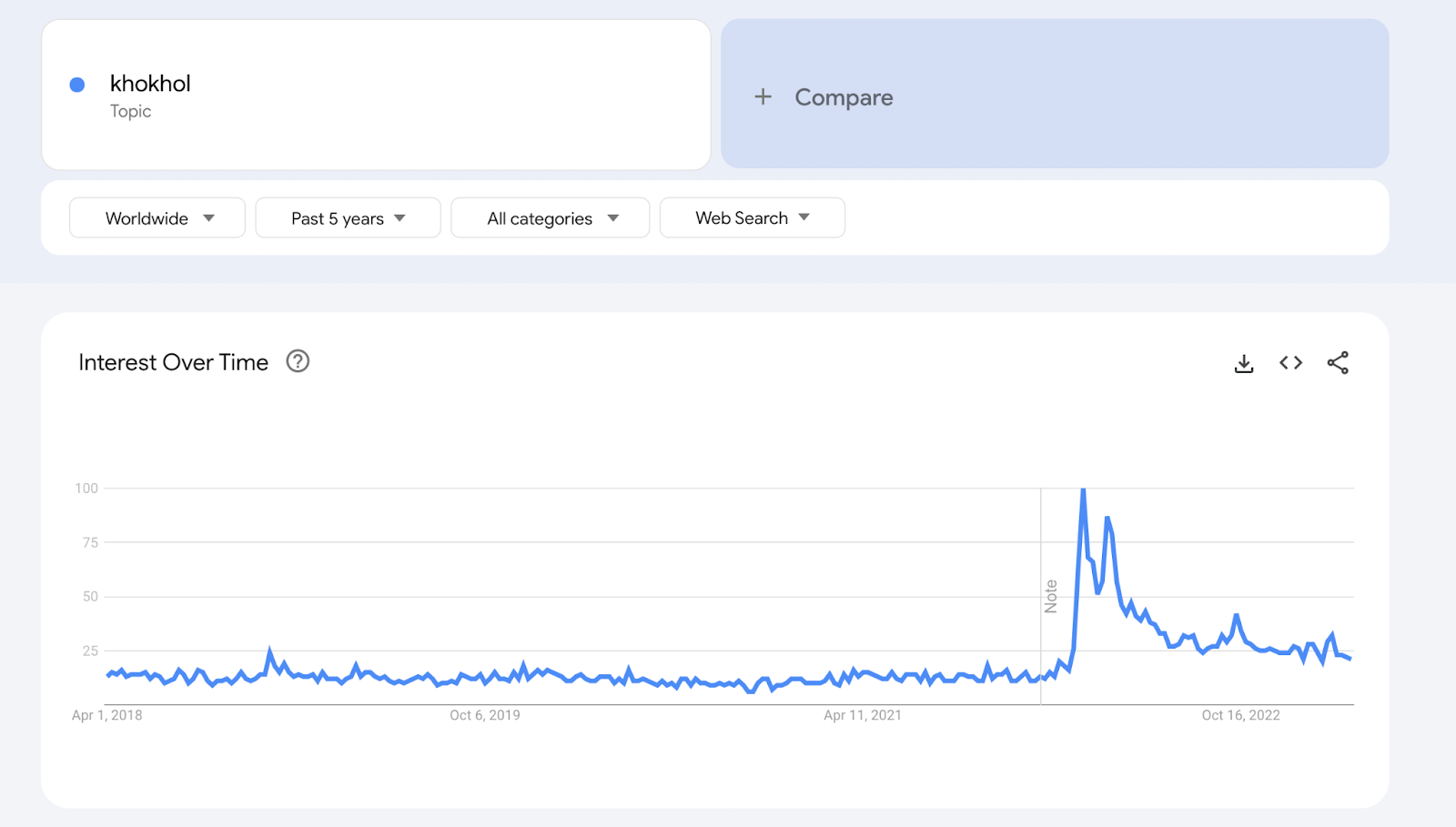

Indications of the increase in the prominence of this type of wording targeting Ukrainians can be seen in Google search statistics (seen below), which indicate a clear increase in searches for the topic khokhol since late-February 2022, around the same time the full-scale invasion of Ukraine commenced.

Figure: Google Trends statistics worldwide on the topic of Khokhol over the past five years. Specific peaks in the search results are seen at February 25, 2022 – March 5 2022.

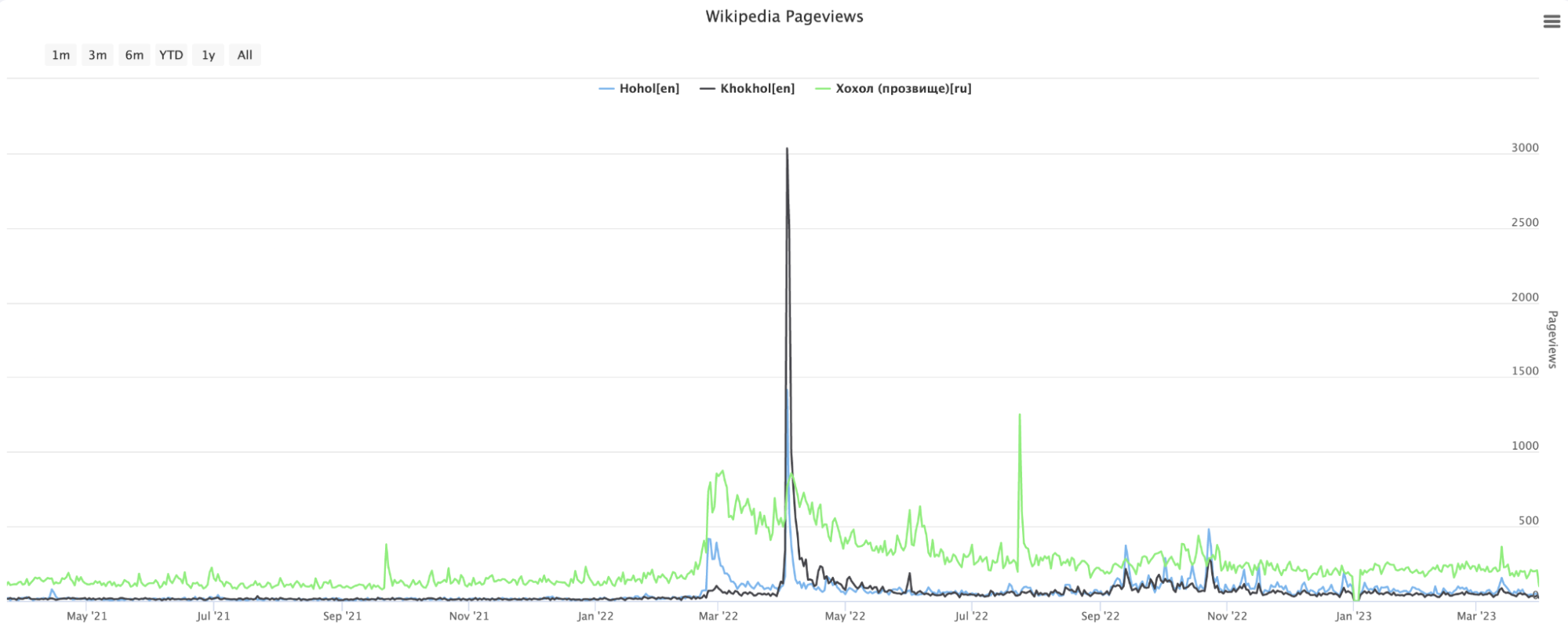

Further indications are derived from the trends of Wikipedia page traffic and trends as assessed through the WikiShark research tool. In the image below, the tool was used to view traffic data to the English pages on the topics of Hohol, Khokhol and the Russian page хохол.

Figure: Wikipedia page traffic statistics on the terms Hohol, Khokhol and the Russian page хохол since May 2021. The largest spike, seen in black for the term khokhol, was on April 4 2022. Source: wikishark.com.

The trends show a clear correlation between the terminology and the February 2022 full-scale invasion of Ukraine by Russia. However, they only show traffic data. This report’s main findings indicate a clear effort to use the terminology to target and call for violence against Ukrainians.

The wording has been well documented in its use by Russians as a slur referring to Ukrainians. And while platforms have previously attempted to mitigate its use, the data collected in this research shows that it still remains widespread.

In 2015, Facebook blocked Russian bloggers for using the wording, but Russian Government representatives responded and urged Russians to migrate to other social media platforms. As an indication of the widespread use of the terminology, Ukrainian YouTuber Andrei Chekhmenok (Андрей Чехменок) ran a social experiment on online video chat website Chatroulette in 2016. In the experiment, there was a repeated use of the term khokhol and other forms of hate speech as a slur.

A similar instance was reportedly seen on VideochatRU where a Russian man encountered a Ukrainian on video chat and the man said to a girl sitting next to him: “Look, hohol. Shoot hohol, kill. Kill hohol… More… and a control shot in the head”.

Post-full-scale invasion of Ukraine in 2022, the use of this hate-based wording has surged, especially in reference to aggressions against Ukraine and Ukrainians.

For example, former Komsomolskaya Pravda Russian propagandist Sergei Mardan (Сергей Мардан) has made numerous comments calling for the killing of Ukrainians and for Ukraine’s “liquidation”. This phrasing targets the state, in addition to the calls for violence against Ukrainians. In one specific segment of a video filmed in October 2022, he talks about drones deployed by Russia to “fly and land on the hohols heads”.

Figure: Russian propagandist Sergei Mardan making comments in relation to the use of Iranian drones in Ukraine. Translated by Francis Scarr.

On Russian state-funded media RT, former director of broadcasting Anton Krasovsky (Антон Красовский) used racial slurs in reference to Ukrainians while he suggested drowning Ukrainian children.

Figure: RT state-funded media broadcast of Anton Krasovsky using slurs to dehumanise Ukrainians and suggesting Ukrainian children to be drowned. Translated by Julia Davis.

The same resentments are echoed by members of the Russian forces. In one example, documented by The New York Times, an intercepted phone call reveals a Russian soldier’s wife asking for trophies of khokhols he has killed while in Ukraine, such as tunics, patches, names and boots.

Soldiers have also openly posted online using similar devaluing wording in reference to Ukrainians. For example, Russian soldier Viktor Bulatov (Виктор Булатов) was reported to have written on social media that the Russian military should “arrange a Bucha in every khokhol city from Kharkiv to Lviv”. The reference to Bucha specifically refers to the activities during the Russian occupation of the area west of Kyiv in March 2022, when Russian forces tortured and executed numerous Ukrainian civilians.

The culture of the use of hate speech against Ukrainians is widespread. For example, a Ukrainian soldier who received racially abusive messages told the BBC: “In the texts that I received, they called me a ‘bloody khokhol’ and threatened to find my mum and sister in Nova Kahovka [a town in occupied Kherson at the time] and rape them”. In other examples, YouTube channels use the term khokhol in video game simulations re-enacting Russia’s invasion of Ukraine; one such video, titled ‘Great Khokhol Slaughter of ‘22’, brandishes Russian military and Z symbols. Another video shows captured Ukrainian soldiers, titled ‘Russian Soldiers Detained Another Ukrainian Khokhol Band’.

Figure: Left shows the video game simulator ARMA3 reenacting the war in Ukraine. Right: captured Ukrainian soldiers. Both images contain wording indicated to be hate speech against Ukrainians.

5. Platform policies on hate speech

Most large social media platforms have rules and policies governing the use of their platforms to curb illicit, inauthentic and harmful behaviour. Specifically, most of them contain clear guidelines put in place to combat incitement to violence and hate speech.

Twitter updated its policies on Hateful Conduct in February 2023. It reads: “you may not directly attack other people on the basis of race, ethnicity, national origin, caste, sexual orientation, gender, gender identity, religious affiliation, age, disability, or serious disease.”

Twitter further details violations of the Hateful Conduct policy to include hateful references, incitement, slurs and tropes, and dehumanisation. It states that if an account is found to have violated the policy Twitter will “take action against behaviour that targets individuals or an entire protected category with hateful conduct”.

Meta has a hate speech policy stating they “don’t allow hate speech on Facebook. It creates an environment of intimidation and exclusion, and in some cases may promote offline violence.”

Meta’s policy page defines its stance to hate speech as: “a direct attack against people – rather than concepts or institutions – on the basis of what we call protected characteristics: race, ethnicity, national origin, disability, religious affiliation, caste, sexual orientation, sex, gender identity and serious disease. We define attacks as violent or dehumanising speech, harmful stereotypes, statements of inferiority, expressions of contempt, disgust or dismissal, cursing and calls for exclusion or segregation.”

Google uses a hate speech policy very similar to those of Meta and Twitter. In reference to YouTube, it says they “remove content promoting violence or hatred against individuals or groups” and describes a list of attributes. Relevant to this research, the attributes are: ethnicity, nationality, victims of a major violent event and their kin.

Telegram’s terms of service state that users agree not to promote violence on publicly viewable Telegram channels. In one tweet, Telegram’s founder, Pavel Durov, states that the rules of Telegram “prohibit calls for violence and hate speech”.

6. Method of research

Data was collected from Twitter and Telegram in three phases for the purpose of this research:

Collection

Visualisation

Analysis

6.1 Collection

The keywords collected were: Khokhol, хохол, Khokhols, хохлы, Hohol and Hohols. The words are the same, but there are different iterations in plural, singular and differing versions.

Based on keywords, data was automatically collected from Twitter using the Twitter API and the Twitter Streaming Importer. This was done between February and April 2023. The dataset resulted in 1585 accounts with more than 2500 interactions.

It should be noted that while the majority of the data collected in this phase referred to content clearly used to dehumanise Ukrainians, some of the tweets collected were outlining the definition of the terminology by those seeking to educate and inform audiences on the usage of the language.

Based on keywords, data was automatically collected from more than 480 Telegram groups, which were a mix of pro-Russian channels and channels that posted about Russia’s war on Ukraine and posted related content in Russian and English. Only the text of messages was collected. The data was collected within the timeframe of February 2021 to April 2023. The dataset resulted in 15655 results from more than 480 channels.
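The keyword filtering described in this section can be illustrated with a minimal sketch. The report does not state how matching was implemented, so the whole-word, case-insensitive matching below is an assumption, as are the function names and the `text` field on each message. Exact-form matching of this kind would not catch inflected Russian forms of the keywords.

```python
import re

# Keywords as listed in the report (Latin and Cyrillic, singular and plural).
KEYWORDS = ["khokhol", "khokhols", "hohol", "hohols", "хохол", "хохлы"]

# Assumption: whole-word, case-insensitive matching. In Python 3, \b and
# IGNORECASE apply to Unicode text, so the Cyrillic forms are covered too.
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in KEYWORDS) + r")\b",
    re.IGNORECASE,
)


def matched_keywords(text: str) -> list[str]:
    """Return the lower-cased keywords found in text, in order of appearance."""
    return [m.group(1).lower() for m in PATTERN.finditer(text)]


def filter_messages(messages: list[dict]) -> list[dict]:
    """Keep only messages whose hypothetical 'text' field contains a keyword."""
    return [m for m in messages if matched_keywords(m["text"])]
```

A filter like this returns definitional and educational posts as well as abusive ones, which is why the collected data still needed manual review.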

6.2 Visualisation

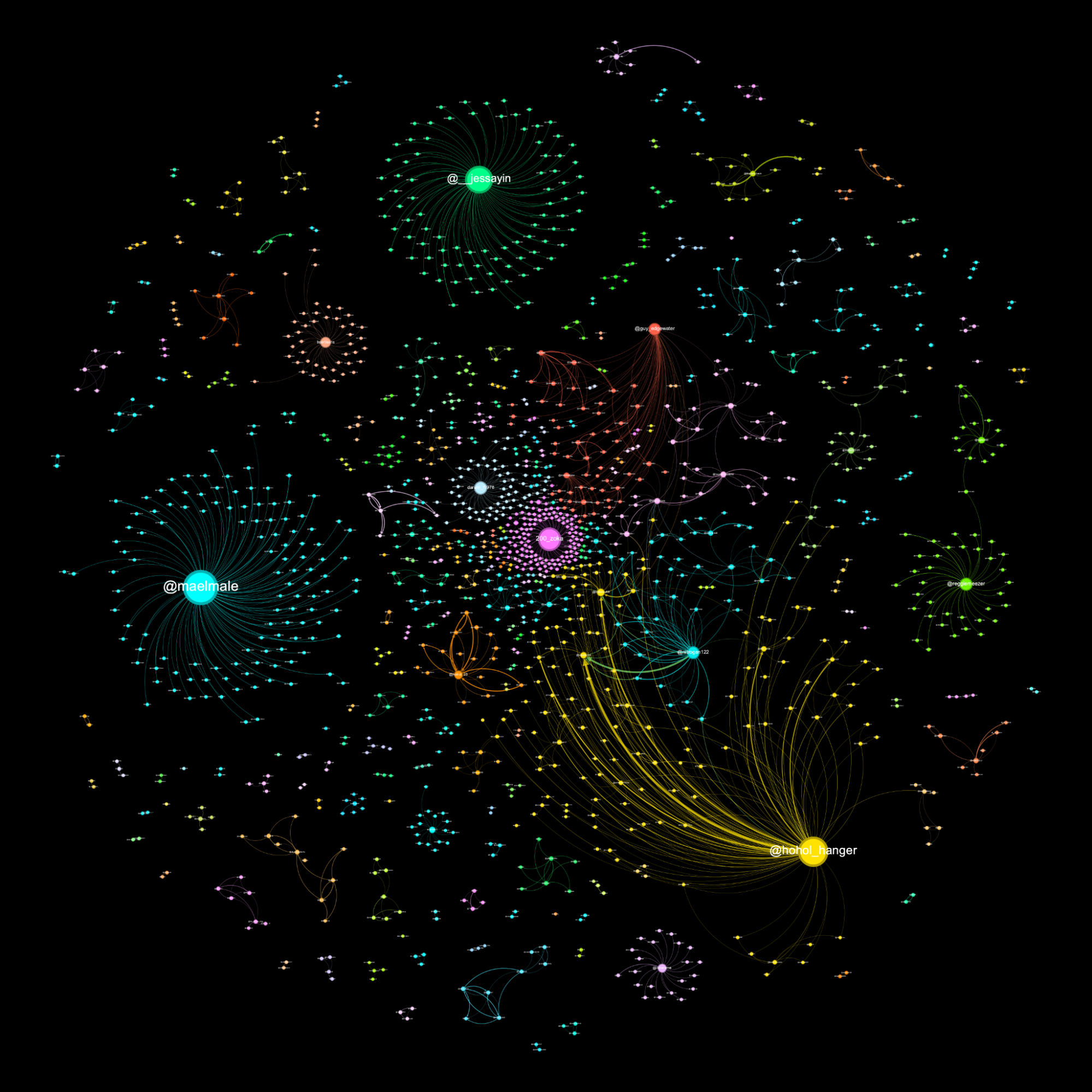

Data from Twitter was visualised in Gephi to construct a retweet network where each node in the graph represents a Twitter account and the edges (the lines between them) represent retweets. To further analyse the nodes, they were segmented into communities using Gephi and its cluster analysis. Nodes were automatically sized based upon the prominence of the number of interactions.
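As a rough illustration of how a retweet network of this kind can be prepared for Gephi, the sketch below aggregates retweets into weighted edges and writes them to a CSV edge list with `Source`, `Target` and `Weight` columns, which Gephi's spreadsheet importer accepts. The record fields `retweeter` and `author` and the function names are hypothetical. The report does not describe its own export step, and the community detection itself was done inside Gephi.

```python
import csv
from collections import Counter


def build_retweet_edges(retweets):
    """Count retweets per (retweeter, original author) pair."""
    return Counter((r["retweeter"], r["author"]) for r in retweets)


def interaction_counts(edges):
    """Total interactions per account: retweets made plus retweets received."""
    totals = Counter()
    for (source, target), weight in edges.items():
        totals[source] += weight
        totals[target] += weight
    return totals


def write_gephi_edge_csv(edges, path):
    """Write a weighted edge list that Gephi can import as a spreadsheet."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["Source", "Target", "Weight"])
        for (source, target), weight in sorted(edges.items()):
            writer.writerow([source, target, weight])
```

The per-account totals from `interaction_counts` correspond loosely to the node sizing described above, where nodes with more interactions are drawn larger.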

The data from Telegram was visualised in a chronological timeline based on the terms used in messages each day.
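A daily timeline of this kind reduces to counting keyword-matching messages per calendar day. The sketch below assumes the messages have already been filtered by keyword and that each carries a hypothetical `timestamp` field in ISO 8601 format. It is an illustration, not the tooling used in this research.

```python
from collections import Counter
from datetime import date, datetime


def daily_counts(messages: list[dict]) -> dict[date, int]:
    """Count messages per calendar day, returned in chronological order."""
    counts = Counter(
        datetime.fromisoformat(m["timestamp"]).date() for m in messages
    )
    return dict(sorted(counts.items()))
```

Plotting the resulting day-to-count mapping gives the kind of chronological view in which peaks in keyword use become visible.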

6.3 Analysis

Data was analysed using both the Twitter and Telegram search functions to identify further content, posted since the full-scale invasion of Ukraine in February 2022, that used the same keywords as seen above. Other social media platforms, such as YouTube, were also used to discover content for the purpose of this research.

It should be noted that VK was not included in this research, as this research was primarily focussed on platforms widely used in western countries.

7. Findings of data analysis on Twitter and Telegram

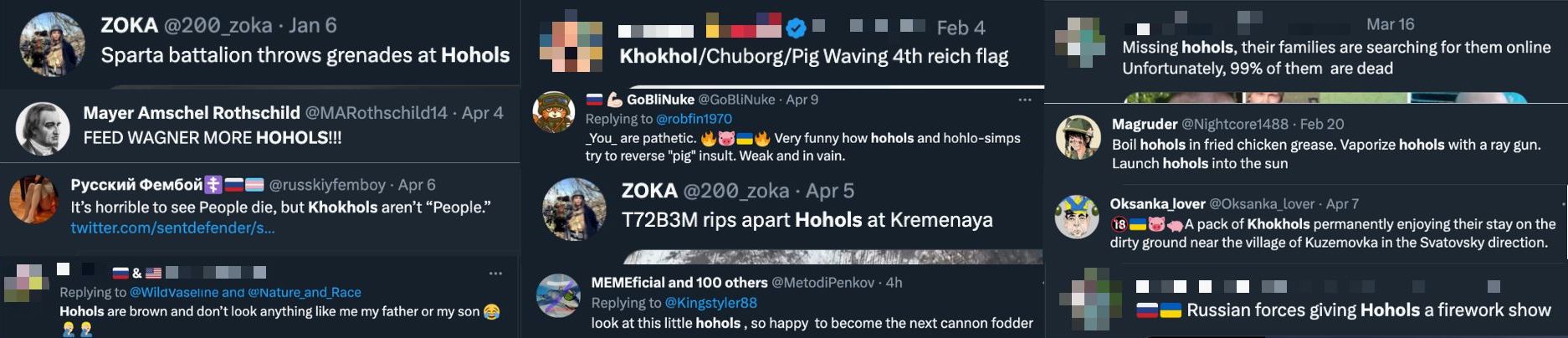

The findings contained in this section show the widespread use of hate speech and national ethnic slurs against Ukrainians by pro-Russian accounts on Twitter and Telegram.

7.1 Visualising the spread of hate speech on Twitter

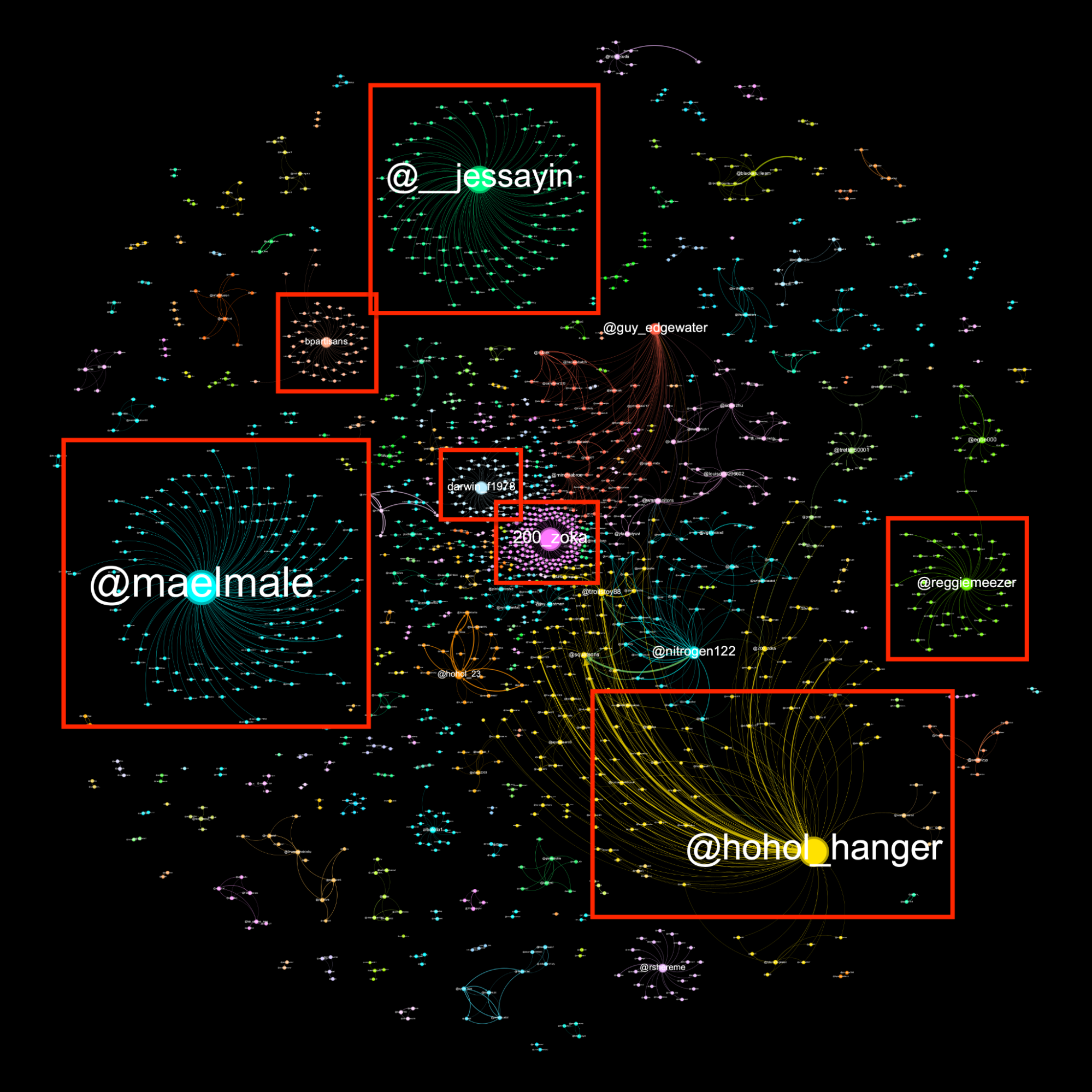

Foremost to this research, the networks of accounts using national slurs in reference to Ukrainians are not linked to one another but operate as separate, disconnected networks. A full visualisation of the networks of those accounts on Twitter is seen below.

Figure: A network diagram created in Gephi of the accounts collected using the Twitter API and other collection methods between February and April 2023 using the hate speech terminology. A more detailed breakdown of the clusters and the names of the larger nodes are seen explained further in this research.

Many of the accounts collected in this research indicate a preference for inauthentic accounts that use a fake name and other traits that make it hard to identify the person behind the account. However, posting times, language, domains and other attributes analysed gave indications as to the regions or countries those accounts are from; for example, posting during daytime hours in the Eastern Time zone possibly indicates a US presence.

Some accounts, however, reveal their own personal details through links to employment, home address and other aspects. For the purpose of this research, those details will not be publicly disclosed.
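The posting-time indicator mentioned above can be sketched as the share of an account's posts that fall within daytime hours for a candidate time zone. Everything here is an assumption made for illustration: the fixed UTC offset (which ignores daylight saving time), the 08:00 to 22:00 daytime window, and the function names. Posting hours are weak evidence on their own and would only ever be read alongside other attributes.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def local_hour_histogram(utc_timestamps, utc_offset_hours):
    """Count posts per local hour for a fixed UTC offset (no DST handling)."""
    tz = timezone(timedelta(hours=utc_offset_hours))
    return Counter(ts.astimezone(tz).hour for ts in utc_timestamps)


def daytime_share(utc_timestamps, utc_offset_hours, start_hour=8, end_hour=22):
    """Fraction of posts made between start_hour (inclusive) and end_hour
    (exclusive) in the candidate zone. Timestamps must be timezone-aware."""
    hist = local_hour_histogram(utc_timestamps, utc_offset_hours)
    total = sum(hist.values())
    if total == 0:
        return 0.0
    daytime = sum(n for hour, n in hist.items() if start_hour <= hour < end_hour)
    return daytime / total
```

Comparing the share across several candidate offsets, for example -5 for Eastern Standard Time, shows which zone best fits an account's activity pattern.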

While more details are provided further in this research, looking in-depth at some of the main accounts’ behaviours, content, and wording, an overview below shows the spread and type of language being used along with the ethnic hate speech.

Figure: Screenshots of just some of the tweets identified in this research using racially motivated hate speech and wording it is used in conjunction with, in reference to Ukrainians, since June 2022.

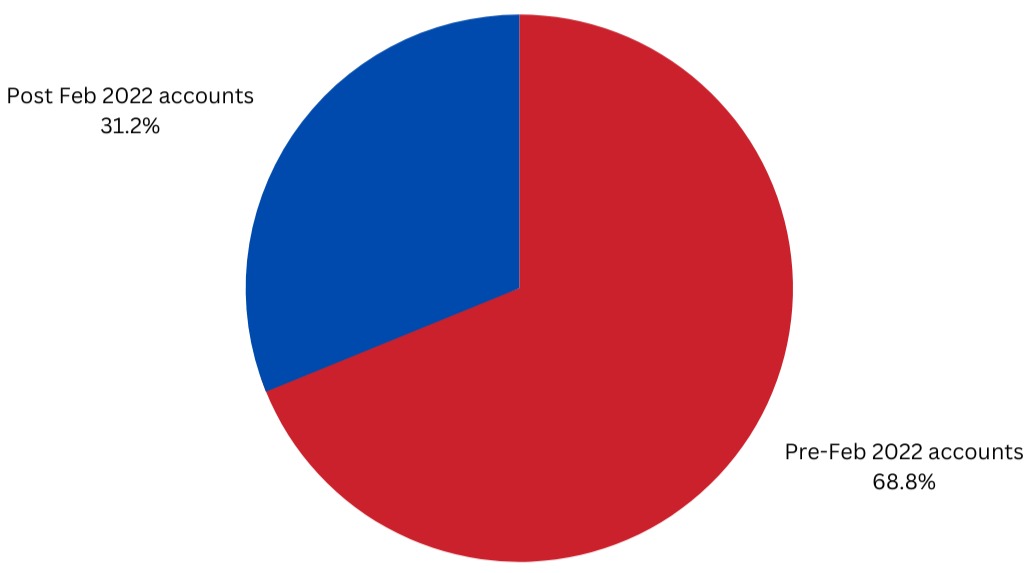

While many of the accounts have separate creation dates, just over 31 per cent of the total number of accounts were created after 1 February 2022, indicating a surge in creation of accounts since the full-scale invasion of Ukraine.

Figure: Of the 1585 accounts that posted using the keywords between February and April 2023, just over 490 of the accounts were created after 1 February 2022.
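The account-age figure is a simple proportion: roughly 490 of 1585 accounts is about 31 per cent. A minimal sketch of that calculation follows, with a hypothetical function name and input format. "After" is treated as strictly later than the cutoff date, which is an assumption about how the boundary was handled.

```python
from datetime import date


def share_created_after(creation_dates, cutoff):
    """Return (count, percentage) of accounts created strictly after cutoff."""
    if not creation_dates:
        return 0, 0.0
    newer = sum(1 for d in creation_dates if d > cutoff)
    return newer, 100.0 * newer / len(creation_dates)
```

Called with the creation dates of all collected accounts and a cutoff of `date(2022, 2, 1)`, this returns both the raw count and the percentage quoted above.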

Of the accounts analysed, there appear to be several main accounts with the largest impact and reach. These are seen as larger nodes on the network graph due to their relevance in the network through retweets, authority and followers.

It was identified in early stages that some of the accounts that appear to have an impact were defining anti-Ukrainian hate speech, rather than actually using it. However, these were only a small number of nodes within the network.

Figure: Accounts that were identified as contributing towards the use of hate speech against Ukrainians on Twitter between February and April 2023. Retweeter networks are seen coloured independently for each cluster. The stronger lines between them (edges) indicate the retweets and how much amplification that retweet caused.

7.2 Analysis of main hate speech spreader accounts on Twitter

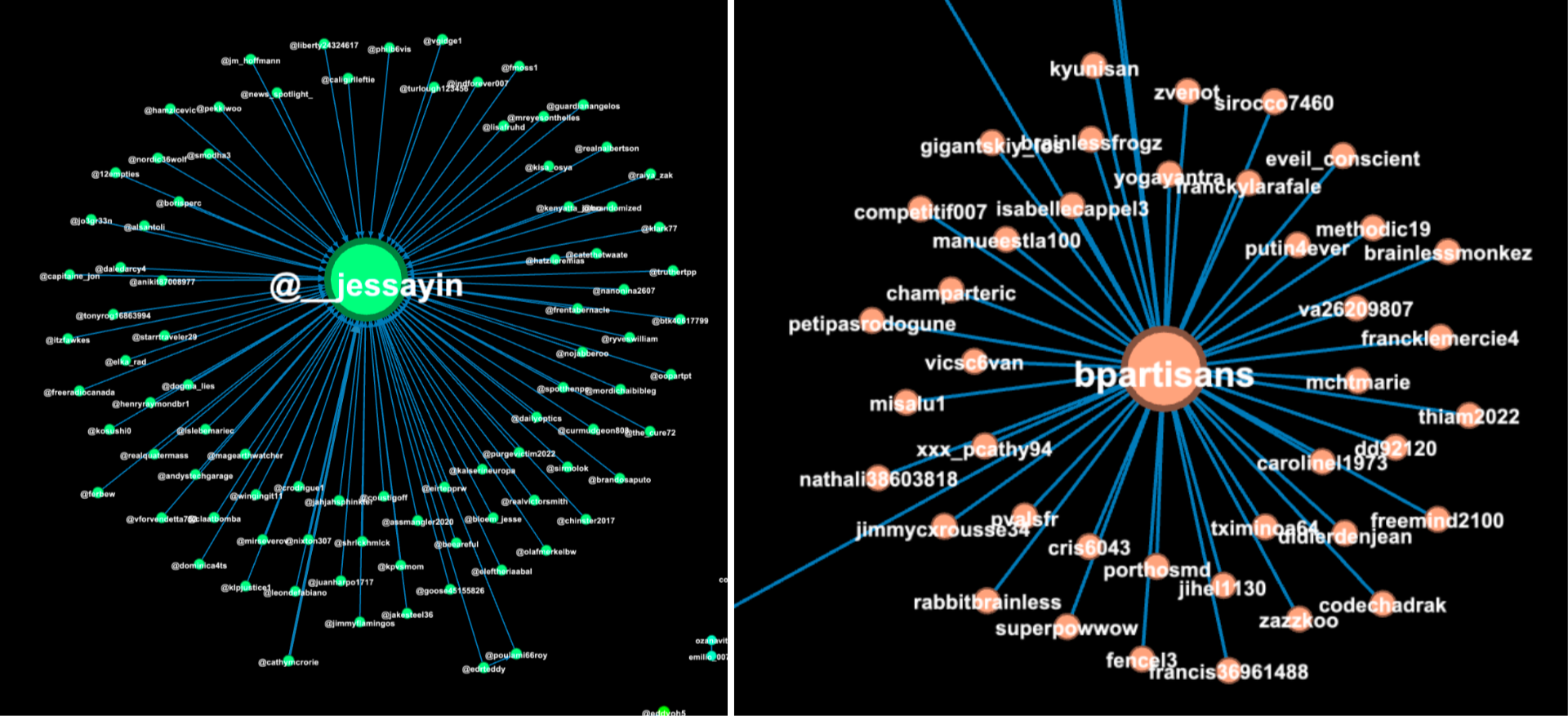

Of the several accounts identified above, at least two appear to be verified accounts: @__jessayin and @BPartisans. As of April 2023, it is unclear as to whether they were Twitter Blue subscribers or legacy verified accounts.

Figure: Retweeter network diagrams of @__jessayin and @BPartisans for their posts that involved hate speech content.

Both accounts appear to be very supportive of the Russian Government in their tweets, and regularly post content to dehumanise Ukrainians, support the Russian Government’s justification that the invasion is to ‘denazify’ Ukraine, and undermine the Ukrainian Government.

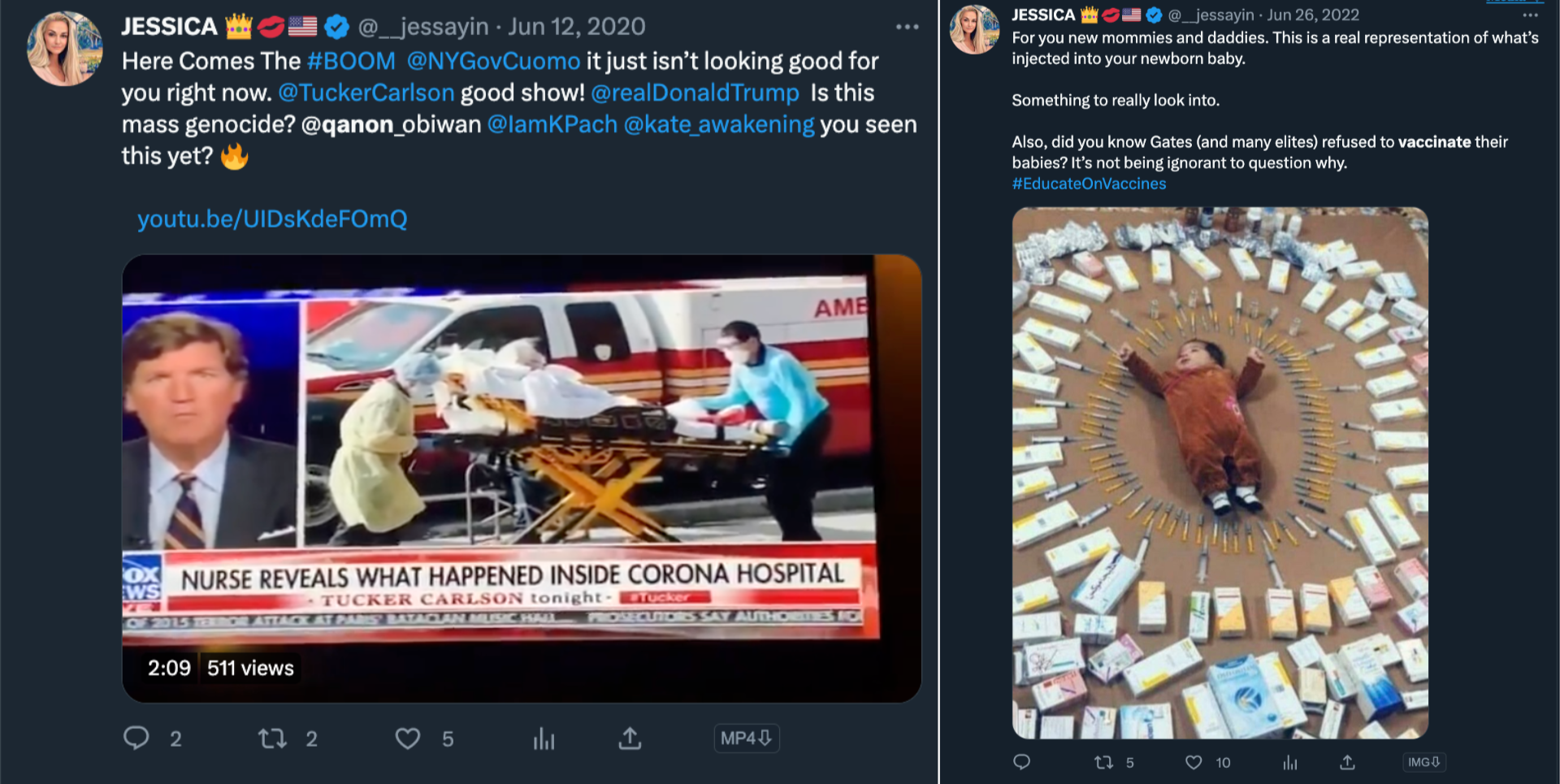

Figure: Screenshots taken from analysed accounts on Twitter.

Both accounts appear to regularly post about pro-Russian narratives and issues to stoke tensions, cause divide and justify Russia’s invasion of Ukraine. For example, subjects seen across the two accounts cover tweets negatively targeting the transgender community, supporting Donald Trump, biolabs and others. In the examples below, both accounts posted about a Russian state-fuelled conspiracy related to biolabs in Ukraine.

Figure: Screenshots taken from analysed accounts on Twitter.

The account of @__jessayin was more active in posting supportive content about Trump, critically targeting transgender issues, Nord Stream conspiracies, anti-vaccination conspiracies and QANON-linked content. It should also be noted that this account links to the @_jessayin account on the Donald Trump-founded platform Truth Social.

Figure: Screenshots taken from analysed accounts on Twitter.

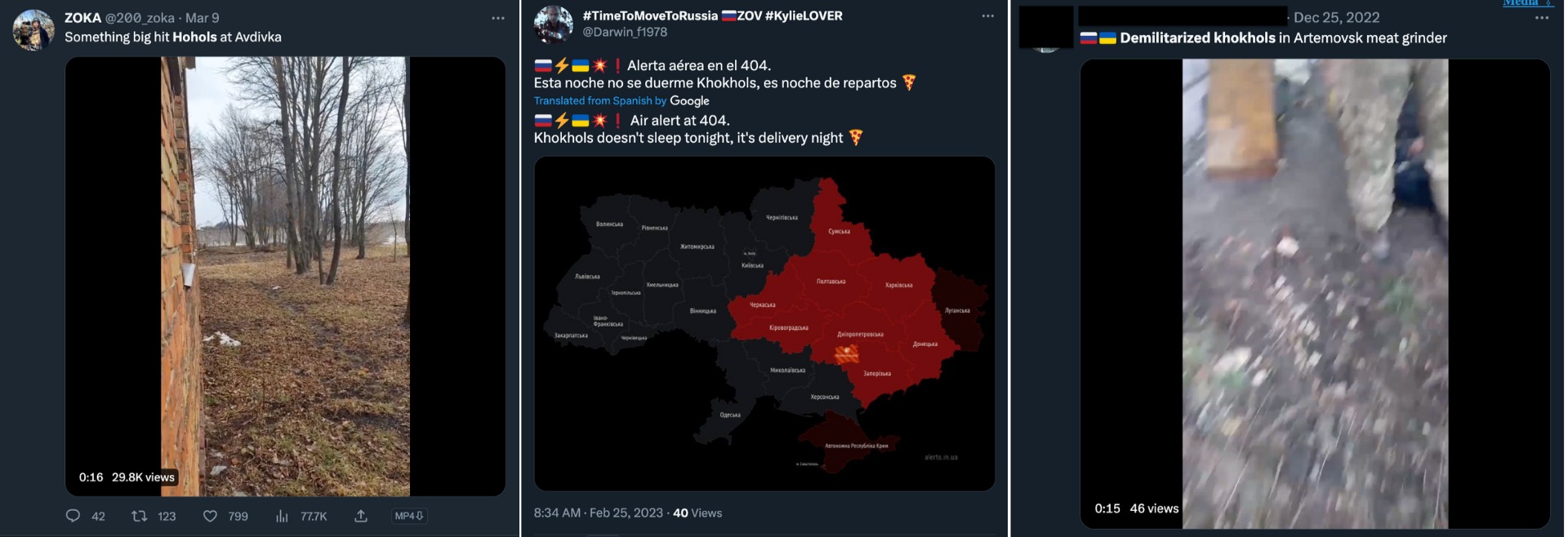

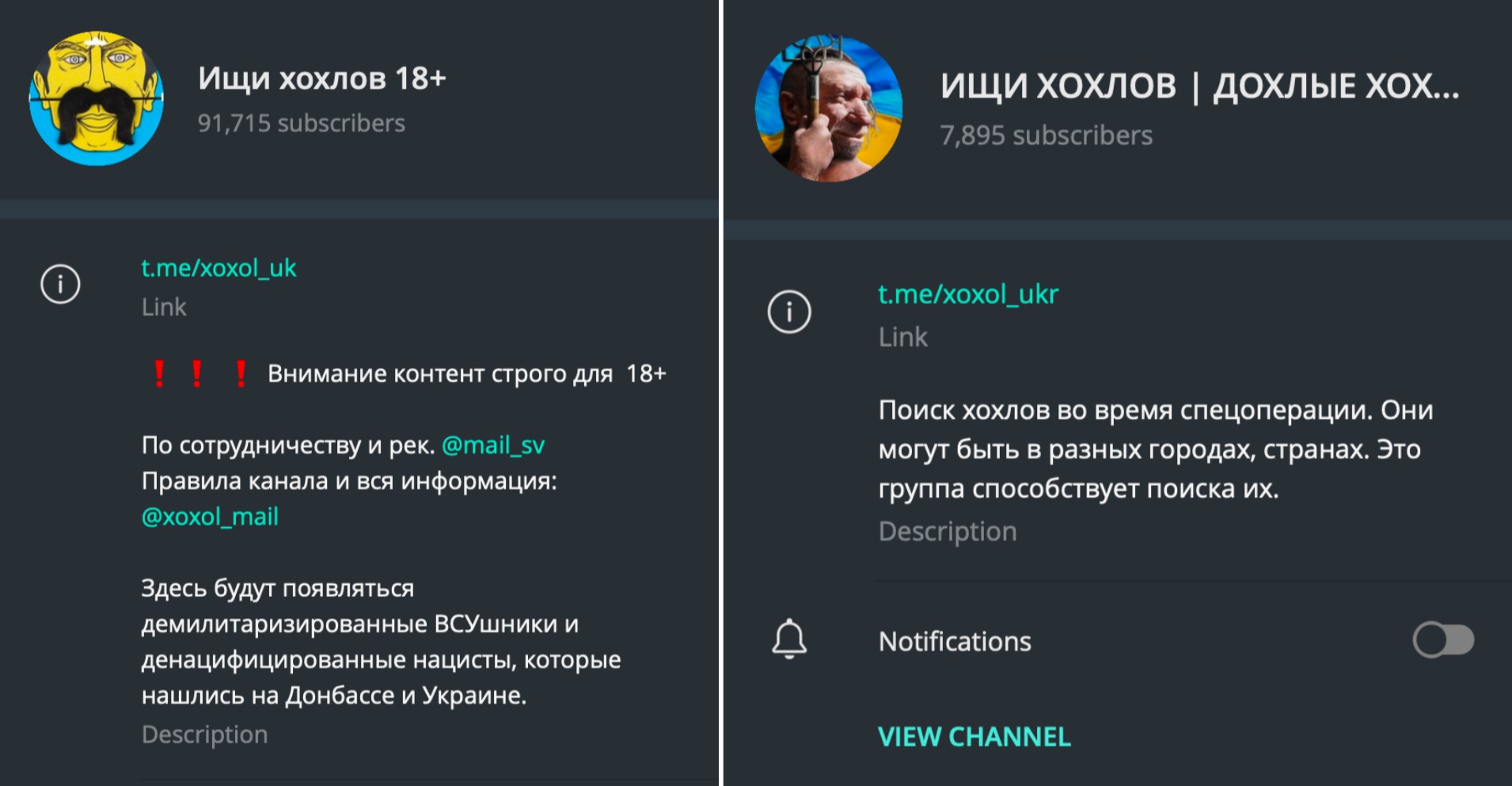

Of the accounts that posted regularly about the war in Ukraine, the ones that were retweeted the most in the network were @200_zoka, @Darwin_f1978, @maelmale and another account which appears to be the person’s real name. At the time of writing this report, another one of the main accounts (@Hohol__Hanger) collected in phase one of the data collection was suspended from Twitter, reported by activists for ‘hateful conduct’.

All of the accounts indicated signs of using regular racially abusive wording to describe Ukrainians and celebrating Ukrainian deaths.

Figure: Screenshots taken from analysed accounts on Twitter.

The account with the most content related to hate speech appears to be an army veteran in the US.

Figure: Screenshots taken from analysed accounts on Twitter.

In many cases, the hate speech language was used in conjunction with the celebration of Ukrainian deaths or denial of events such as the crimes in Bucha in March 2022.

Figure: Screenshots taken from analysed accounts on Twitter.

7.3 Tracking hate speech against Ukrainians on Telegram

During the process of investigating the Twitter accounts using hate speech, numerous posts were found to have originated on Telegram and subsequently posted on Twitter.

Due to Telegram’s less restrictive policing on language and harmful content, there is more likelihood for groups to exist targeting individuals or nationalities.

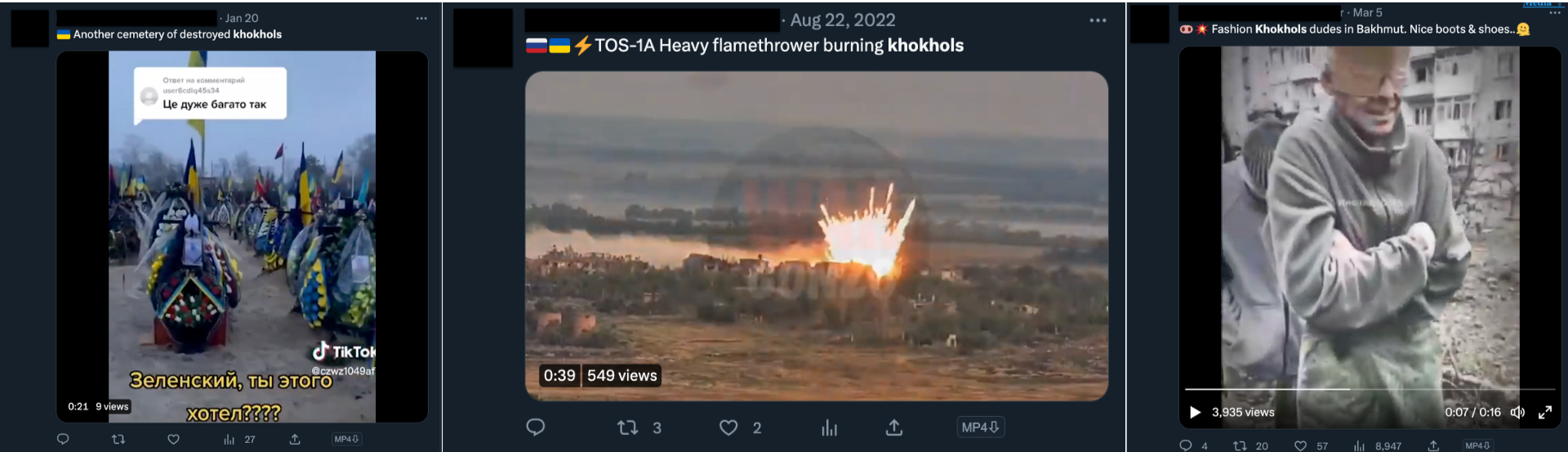

Given the high level of use of Telegram in the context of the war in Ukraine, it is important to consider Telegram as a player in the facilitation of hate speech against Ukrainians. Data collected from Telegram, as seen below, indicates a significant rise in the use of the terminology since February 2021.

Figure: Messages on Telegram on followed channels that contain slurs against Ukrainians since February 2021. Significant peaks in the keyword use occurred in late April 2022 and surged again in September 2022.

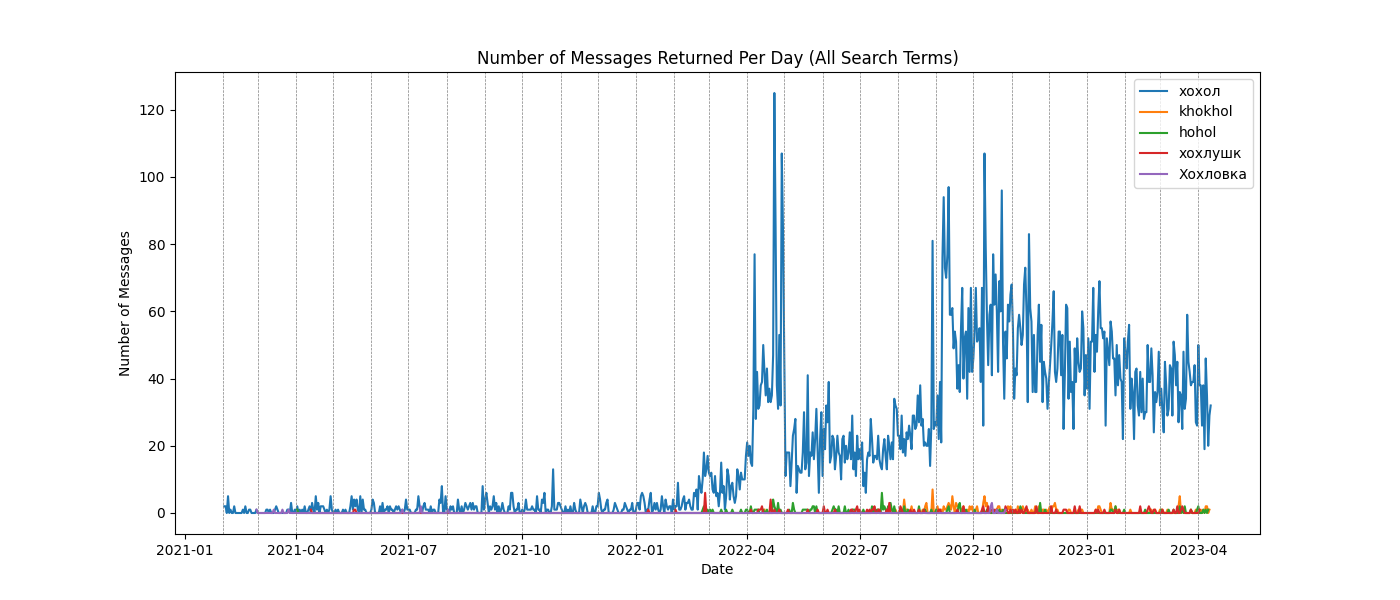

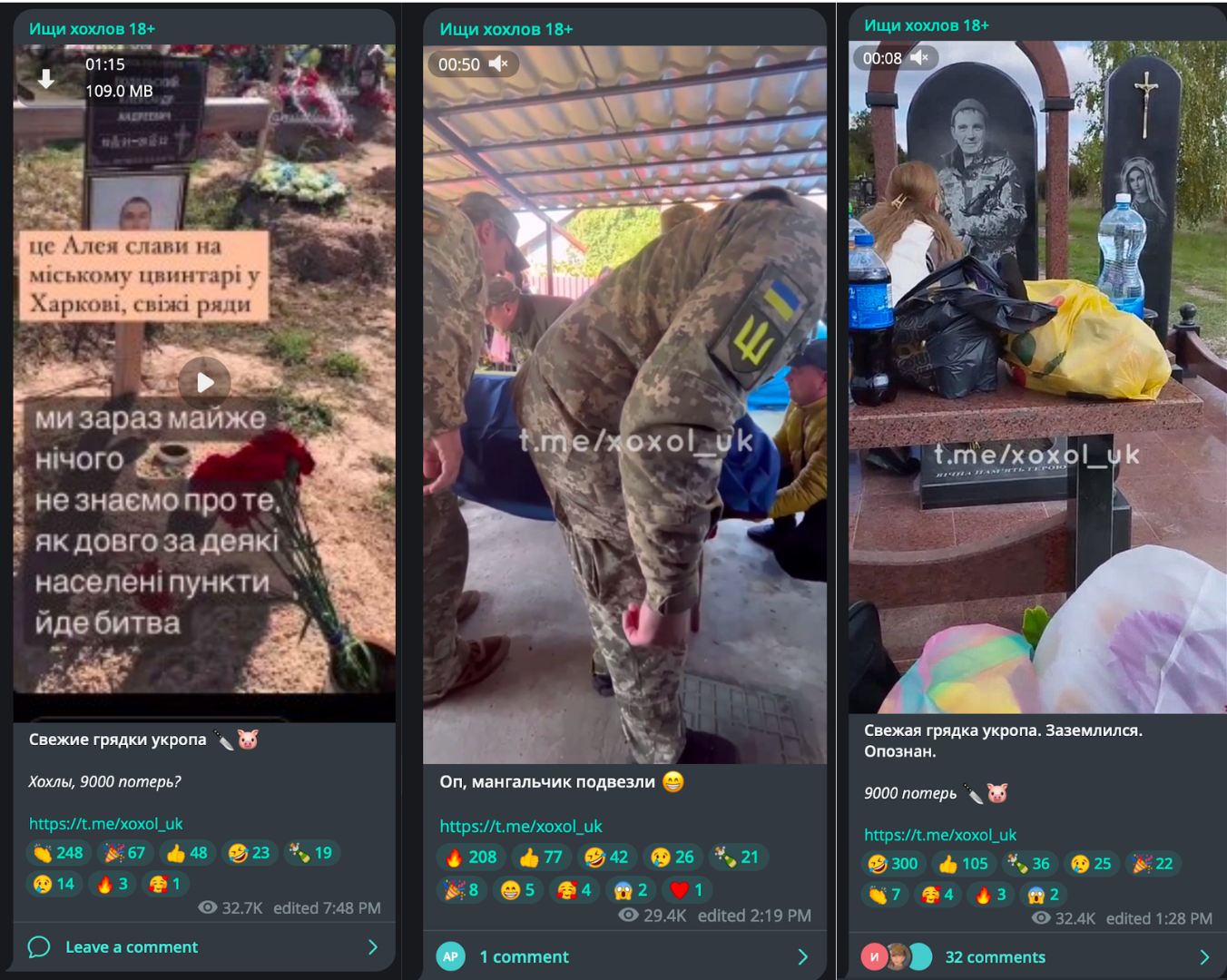

During the Twitter-based research, a number of the tweets appeared to be sharing videos from a Telegram account titled Ищи хохлов 18+ (literal translation is Look for Khokhols 18+).

A textual analysis of the entire channel reveals that the term хохол (hohol) has been mentioned more than 1000 times. Our analysis also identified a related channel titled: “Look for Khokhols, dead Khokhols, corpses of dead military of Ukraine, captive Khokhols”.

Figure: Related Channels from Telegram posting images and videos of injured or dead Ukrainian soldiers and civilians. Both channels engage in using hate speech and call for further deaths.

Both of the channels regularly post videos of either Ukrainian funerals, or Ukrainian new gravesites, and use emojis and language as slurs and attacks against Ukrainians, as seen in the images below.

Figure: Posts on Telegram celebrating deaths of Ukrainians by using their videos taken at gravesites or funerals. On the left and right screenshots is another slur (ukrop) used in reference to Ukrainians.

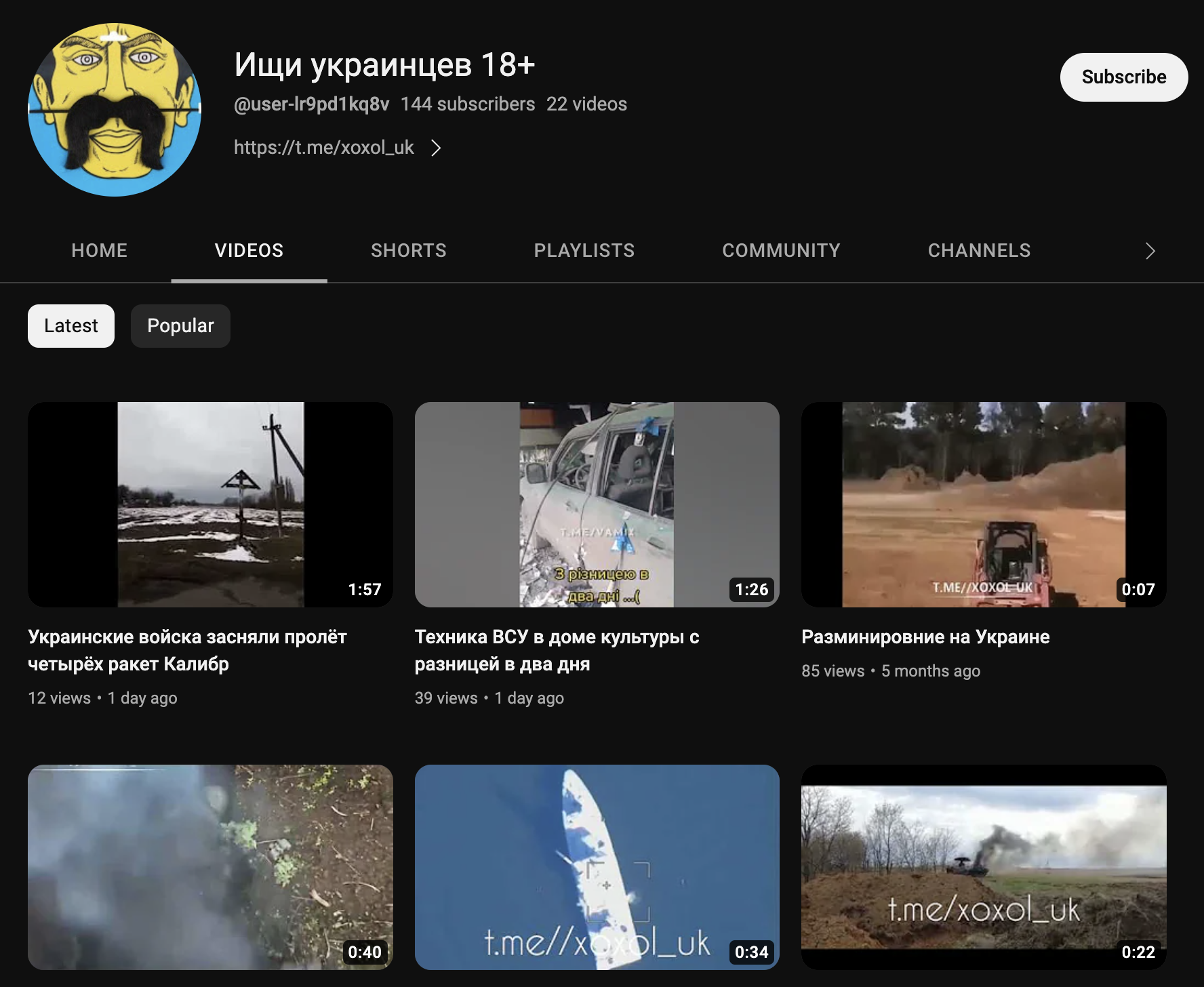

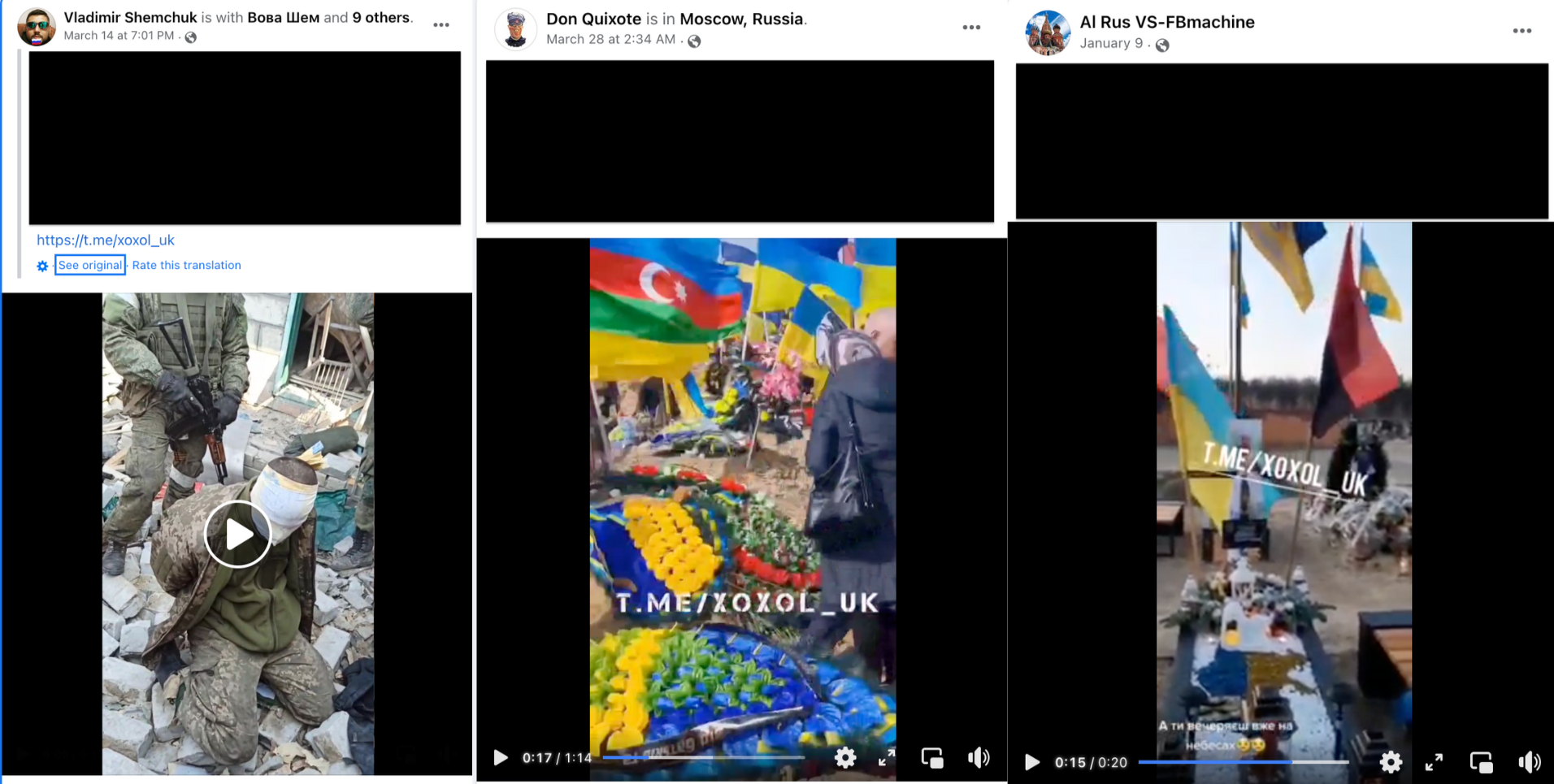

While these channels are concentrated on Telegram, they are also echoed on platforms that appear to have more stringent hate speech policies, such as YouTube and Facebook. For example, the Telegram channel appears to have a mirrored channel on YouTube under the same name, posting a number of the videos celebrating deaths of Ukrainians. A number of the videos from the Telegram channel have also been shared by anonymous YouTube accounts.

Figure: Screenshot from YouTube. Note: the channel uses a slightly different title to the Telegram channel as it says “Look for Ukrainians”.

On Facebook, the content appears to be reuploaded with numerous comments supporting the killing of Ukrainians.

Figure: Screenshots from Facebook showing the spread of anti-Ukrainian Telegram channels. Note: the video on the right shows a series of Ukrainian graves with the audio of Christmas music “jingle bells”.

7.4 Hate speech celebrating Ukrainian civilian deaths on Telegram

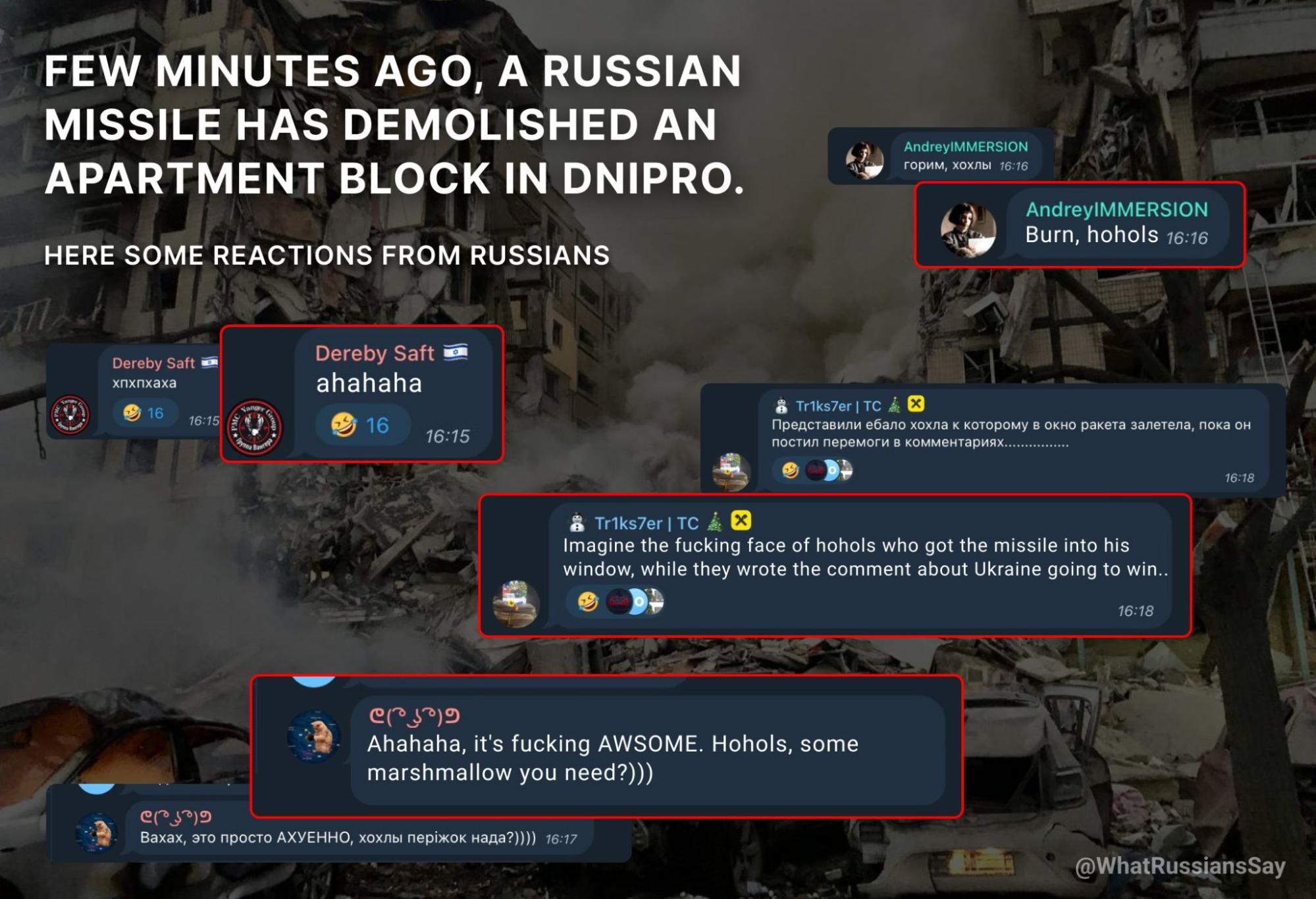

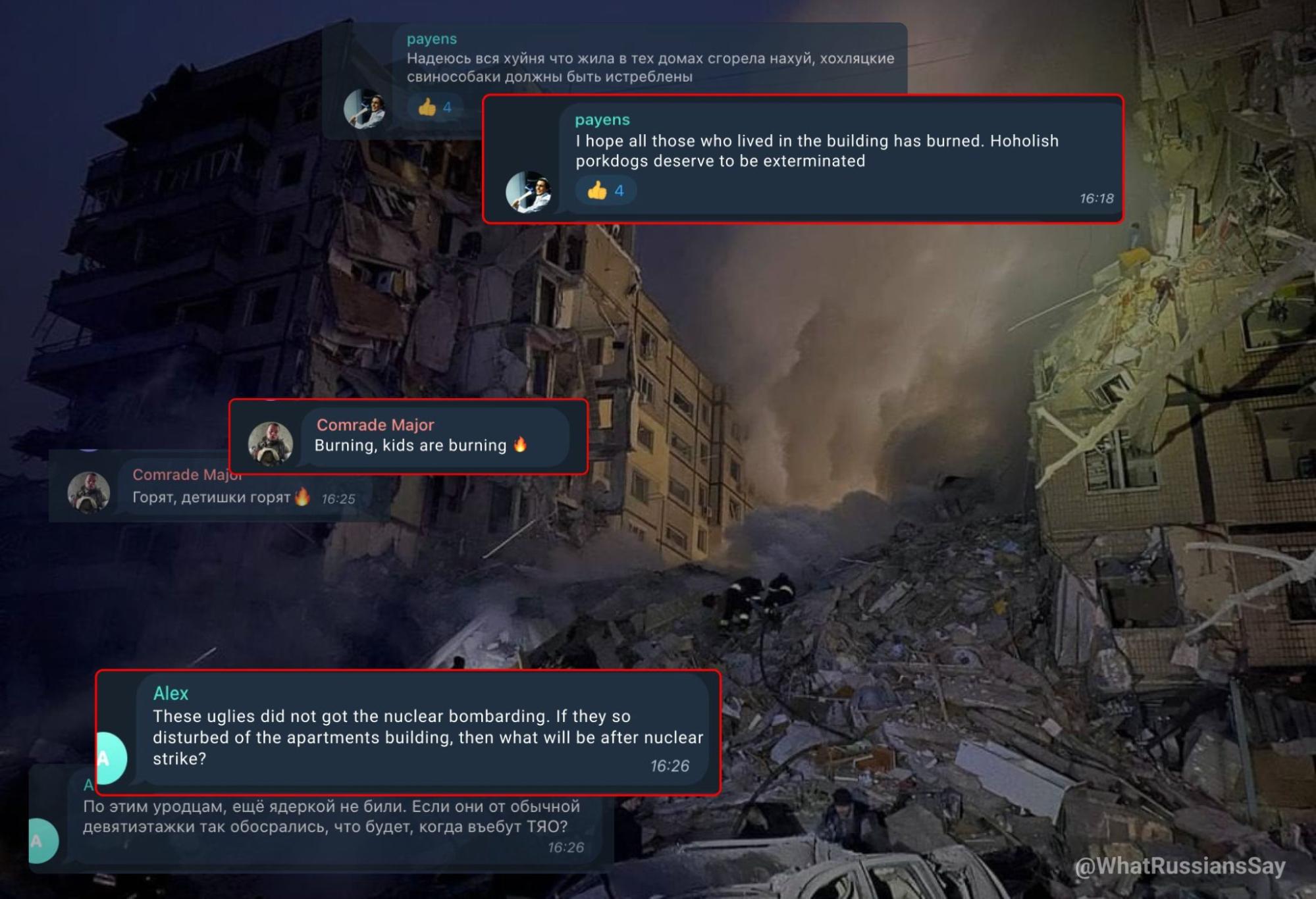

Ethnic slurs and hate speech have also been documented in wide use as comments after events where scores of Ukrainian civilians have been killed. Examples of this use of hate speech against Ukrainians, where Ukrainian civilians have been killed due to Russia’s invasion, have been continuously documented by the account @WhatRussiansSay.

One event where many of these remarks surfaced was in response to a reported Russian missile strike on an apartment building in Dnipro in January 2023, which killed more than 40 civilians.

Two images created by the account @WhatRussiansSay claim to show comments posted on Telegram in response to the deaths of Ukrainians in the strike on the apartment building. Many of the comments use hate speech terms such as hohols and other dehumanising wording.

Figure: Image posted by Twitter account @WhatRussiansSay reportedly showing responses to attack on Dnipro apartment building in January 2023.

Figure: Image posted by Twitter account @WhatRussiansSay reportedly showing responses to attack on Dnipro apartment building in January 2023.

8. Conclusion

Since 2014, hate speech, including slurs, has been on the rise, used to dehumanise Ukrainians and to troll and harass people online.

This research shows that hate speech has increased significantly recently, with a surge since February 2022 after Russia’s full-scale invasion of Ukraine across Twitter, Telegram, and cross-posting on YouTube and Facebook. It should be noted that VK was not included in this research, as this research was primarily focussed on platforms widely used in western countries.

The research, focussing on data collected from both Twitter and Telegram, shows that there is widespread use of slurs against Ukrainians, calling for violence and celebrating the death of Ukrainians, despite platform policies that are meant to stop, or at least mitigate this activity.

This research is aimed at helping social media companies form more informed opinions at a policy level.

The findings presented in this report can help platforms deal with hate speech targeting Ukrainians and understand some of the terminology used on a systematic level. They also outline the clear, widespread level of dehumanising language that supports Russia’s war in Ukraine.

While the hate speech appears to be widespread and repeated by disconnected groups and networks, there appears to be a leading approach set by state-linked media to use hate speech to dehumanise Ukrainians and call for violence.

This wording is used in conjunction with phrases by state-linked media outlets, pro-Russian propagandists, and government representatives, that call for the killing, liquidation or disappearance of Ukrainians and Ukraine, as a nation.

There appears to be a significant attempt to conduct the same level of activity through the use of wording by the state, its proxies, and supporters of Russia on western social media platforms where policies were put in place to combat this exact type of operation.

This research on the use of hate speech and dehumanising language in reference to Ukrainians should be taken in context with the evidence of Russian forces committing acts against Ukrainian soldiers such as beheadings and castrations, as well as against Ukrainian civilians and civilian objects such as ethnic cleansing, torture and the overwhelming use of military forces through the bombing of schools, hospitals, energy sites and other infrastructure as seen on the EyesonRussia.org map.

These findings should be taken in the context of past cases where hate speech has been used as an incitement to genocide, for example in Rwanda, where members of the media were convicted for their role in spreading terminology that dehumanised a certain group of people and incited genocide.